How to Get Your Pages Indexed in Google

If a page isn’t indexed by Google, it won’t show up in search results — no matter how good or relevant it is. That’s why getting your important pages indexed is one of the first and most crucial steps in SEO.

SiteGuru gives you several tools to monitor and improve indexation:

- Indexation Report – find out which pages are indexable and indexed

- Sitemap Report – make sure all key pages are included in your sitemap

- Canonical Report – verify that canonical URLs are set up correctly

- Internal Link Suggestions – improve discovery with smart linking

In this guide, we’ll walk through each of these reports and how to use them to improve your website’s visibility in Google.

Indexation Report

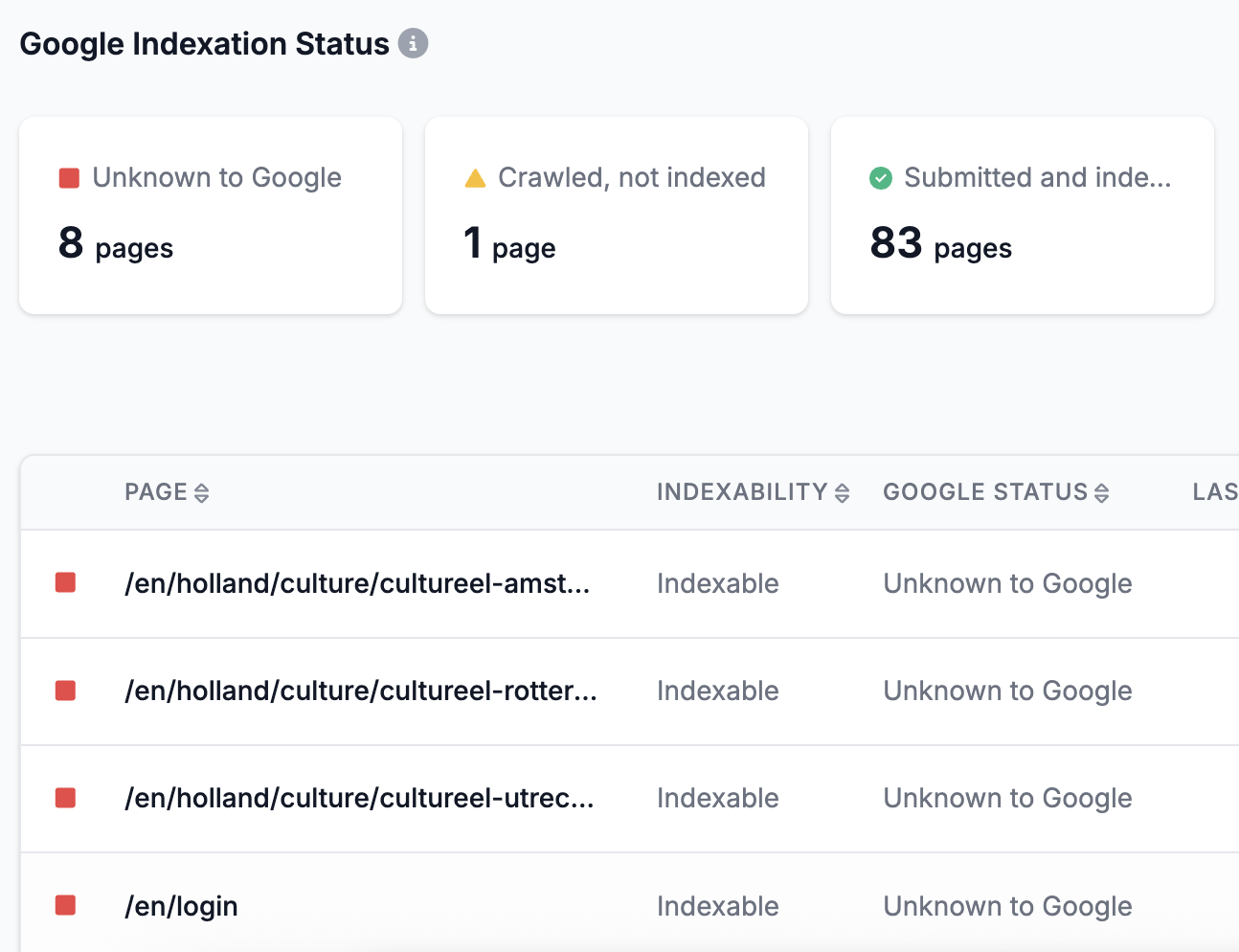

Start with the Indexation Report to get a complete overview of how Google sees your pages.

You’ll find this report in the Technical section of your SiteGuru site report.

What it shows:

- Indexable pages – These are technically allowed to be indexed (no noindex tag, robots.txt block, or conflicting canonicals).

- Non-indexable pages – These pages are blocked from indexing for one or more reasons.

- Indexed status – Even if a page is indexable, it may not be indexed yet. We check this using Google Search Console data.

- Last crawl date – See when Google last crawled a page. This helps diagnose delays or issues with discovery.
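To make the three indexability checks concrete, here is a minimal Python sketch using only the standard library. It is not how SiteGuru implements the report; the function name `indexability_blockers`, the `HeadSignals` helper, and the reading of “conflicting canonicals” as more than one distinct canonical URL are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class HeadSignals(HTMLParser):
    """Collects robots meta directives and canonical links found in a page."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = {key: (value or "") for key, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() == "robots":
            self.robots.append(attrs.get("content", "").lower())
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            self.canonicals.append(attrs.get("href", ""))


def indexability_blockers(url, html, robots_txt):
    """Return the reasons (possibly none) why a page would be non-indexable."""
    blockers = []

    # Check 1: is the URL disallowed for Googlebot in robots.txt?
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    if not rules.can_fetch("Googlebot", url):
        blockers.append("blocked by robots.txt")

    # Checks 2 and 3: noindex directive and conflicting canonical links.
    signals = HeadSignals()
    signals.feed(html)
    if any("noindex" in value for value in signals.robots):
        blockers.append("noindex tag")
    if len(set(signals.canonicals)) > 1:  # assumption: "conflicting" = several distinct targets
        blockers.append("conflicting canonicals")
    return blockers


robots_txt = "User-agent: *\nDisallow: /admin/\n"
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(indexability_blockers("https://example.com/admin/login", page, robots_txt))
# ['blocked by robots.txt', 'noindex tag']
```

An empty list means none of these three blockers was found. It does not mean the page is indexed, which is why the report checks indexed status separately.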

What to do with this info:

- Review non-indexable pages: Are they intentionally blocked (e.g., legal pages, admin sections)? If yes, you can click Ignore Page so they won’t clutter your report.

- Investigate indexable but non-indexed pages: If Google hasn’t indexed them yet, possible reasons include:

  - The page is new and hasn’t been discovered yet

  - The content is low quality or duplicates other pages

  - The page isn’t well linked internally

- Watch for crawl frequency: If a page hasn’t been crawled in a long time, it might need better linking, a sitemap entry, or an update.

Pro tip: Sort by “Indexable but not indexed” to prioritize your efforts.

Sitemap Report

Sitemaps are XML files that help search engines discover your pages. They’re especially useful for:

- New websites with low authority

- Deeply nested pages

- Recently added or updated content

The Sitemap Report in SiteGuru checks both the structure and content of your sitemaps.

Key sections:

- Sitemap overview – Lists all detected sitemaps, shows whether they were submitted to Google, and provides a quick link to the Search Console sitemap report (if connected).

- Missing pages – Pages that were found during our crawl but are not listed in any sitemap.

- Redirected pages – Sitemap entries that redirect to another URL.

- Broken pages – Sitemap entries that return an error (404, 500, etc.).
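The three problem categories above come down to comparing the URLs listed in a sitemap with what a crawl finds. The following Python sketch shows one way to do that comparison. The `statuses` dictionary (URL to HTTP status code) is a hypothetical input standing in for crawl results, and the function names are illustrative rather than part of SiteGuru.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the set of <loc> values in a urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    locs = root.findall("sm:url/sm:loc", SITEMAP_NS)
    return {loc.text.strip() for loc in locs if loc.text}


def audit_sitemap(sitemap_xml, statuses):
    """Group URLs into missing, redirected, and broken.

    statuses maps every known URL (crawled pages and sitemap entries)
    to the HTTP status code it returned.
    """
    listed = sitemap_urls(sitemap_xml)
    return {
        # Pages that load fine but are not listed in the sitemap.
        "missing": sorted(u for u, s in statuses.items() if s == 200 and u not in listed),
        # Sitemap entries that answer with a 3xx redirect.
        "redirected": sorted(u for u in listed if 300 <= statuses.get(u, 0) < 400),
        # Sitemap entries that answer with a 4xx or 5xx error.
        "broken": sorted(u for u in listed if statuses.get(u, 0) >= 400),
    }


sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/old</loc></url>"
    "<url><loc>https://example.com/gone</loc></url>"
    "</urlset>"
)
statuses = {
    "https://example.com/": 200,
    "https://example.com/old": 301,
    "https://example.com/gone": 404,
    "https://example.com/new": 200,
}
print(audit_sitemap(sitemap, statuses))
```

In this example `/new` is reported as missing, `/old` as redirected, and `/gone` as broken.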

What to fix:

- Add missing pages: If a page should be indexed but isn’t listed in the sitemap, add it.

- Remove redirected or broken URLs: These waste crawl budget. Always point Google to the final destination directly.

- Submit your sitemap to Search Console: If it hasn’t been submitted yet, you’ll see a warning in SiteGuru. Submitting your sitemap helps Google discover your pages sooner, although it doesn’t guarantee that they will be indexed.

You can also download a full sitemap generated by SiteGuru and upload it to your server if your CMS doesn’t create one automatically.

Canonical Report

The canonical tag tells Google which version of a page should be considered the “original” or preferred version.

Misconfigured canonicals are a common cause of indexation issues.

The Canonical Report highlights problems like:

- Broken canonical URLs – Pages pointing to non-existent or mistyped URLs.

- Multiple canonical tags – Conflicting signals about which page should be indexed.

- Canonical mismatch – A page sets a canonical to another page, but Google indexes the current page instead.

- Google’s chosen canonical – See whether Google respects your chosen canonical or overrides it.

What to do:

- Make sure each page has only one canonical tag.

- Canonical URLs should be self-referencing unless you’re explicitly consolidating duplicate content.

- Check that canonical URLs actually load and don’t redirect or 404.
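The three checks above can be expressed as a short Python sketch. It is an illustration under assumptions, not SiteGuru’s implementation: `canonical_issues` and `CanonicalCollector` are made-up names, and `target_status` is a hypothetical dictionary mapping each canonical target URL to the HTTP status it returns.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalCollector(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attrs.get("href"):
            self.hrefs.append(attrs["href"])


def canonical_issues(page_url, html, target_status):
    """Return a list of canonical problems found on one page."""
    collector = CanonicalCollector()
    collector.feed(html)
    # Resolve relative hrefs against the page URL.
    targets = [urljoin(page_url, href) for href in collector.hrefs]

    issues = []
    if len(targets) > 1:
        issues.append("multiple canonical tags")
    for target in dict.fromkeys(targets):  # de-duplicate, keep order
        status = target_status.get(target)
        if status is not None and 300 <= status < 400:
            issues.append(f"canonical redirects: {target}")
        elif status is not None and status >= 400:
            issues.append(f"canonical broken: {target}")
        if target != page_url:
            issues.append(f"not self-referencing: {target}")
    return issues


html = '<link rel="canonical" href="/a"><link rel="canonical" href="/b">'
print(canonical_issues("https://example.com/a", html, {"https://example.com/b": 404}))
```

A “not self-referencing” result is only a problem when you did not intend to consolidate duplicate content, so treat it as a prompt to review rather than an error.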

A mismatched or broken canonical can silently deindex a page. Fix these to ensure your preferred URL is shown in search results.

Internal Linking and Orphan Pages

Even if a page is technically indexable, Google may not find it if no other pages link to it. That’s where internal linking comes in.

SiteGuru’s Internal Link Suggestions tool helps you:

- Find orphan pages (pages with no internal links)

- Suggest relevant pages that can link to these orphan pages

- Improve crawlability and distribute link equity across your site

Why this matters:

- Googlebot discovers new content by following links from existing pages

- Pages without internal links may remain undiscovered or be crawled very slowly

- Internal links help search engines understand content hierarchy and topic clusters
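Since discovery depends on links from existing pages, an orphan page is simply one that no other page links to. The Python sketch below counts inbound internal links from a link map. The `links` dictionary (page to the pages it links to) and the function names are hypothetical; a real crawl would produce this data.

```python
def inbound_link_counts(links):
    """Count how many distinct other pages link to each page."""
    counts = {page: 0 for page in links}
    for source, targets in links.items():
        for target in set(targets):      # count each source page once
            if target != source:         # ignore self-links
                counts[target] = counts.get(target, 0) + 1
    return counts


def orphan_pages(links, entry_points=()):
    """Pages with zero inbound internal links, excluding known entry points."""
    counts = inbound_link_counts(links)
    return sorted(page for page, n in counts.items() if n == 0 and page not in entry_points)


links = {
    "/": ["/blog", "/pricing"],
    "/blog": ["/", "/blog/post-1"],
    "/pricing": ["/"],
    "/blog/post-1": ["/blog"],
    "/landing-2021": ["/"],   # links out, but nothing links to it
}
print(orphan_pages(links))
# ['/landing-2021']
```

The same counts can be sorted in ascending order to surface low-link pages, not just fully orphaned ones.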

What to do:

- Go to the Orphan Pages report to find low-link or unlinked pages

- Use the Internal Link Suggestion tool to find linking opportunities from contextually relevant pages

- Add these links manually (or via CMS tools) to improve discovery

Good internal linking improves crawlability and search performance.

Still Not Indexed?

Even after fixing all the technical aspects, some pages might still not appear in Google’s index. Here’s why — and what to do.

1. It takes time

If your site is new or the page was recently created, give it some time. It can take days or weeks for Google to index new content.

2. Lack of authority

If your site has few or no backlinks, Google may crawl it less frequently. Consider:

- Building high-quality backlinks

- Getting mentioned on relevant websites

- Requesting indexing for key pages with Search Console’s URL Inspection tool

3. Low-quality content

Pages that offer little value, duplicate existing content, or are poorly written may be excluded by Google. Improve your content by:

- Making it more comprehensive and original

- Answering user search intent better

- Including unique insights or media

4. Technical issues outside SiteGuru’s scope

In rare cases, server issues, rendering problems, or incorrect HTTP headers can interfere with crawling. Use tools like Search Console’s URL Inspection to see if Google encounters issues.
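As one example of a header-level problem, a server can send an `X-Robots-Tag: noindex` response header, which blocks indexing even when the HTML itself looks fine. The Python sketch below inspects a status code and a headers dictionary that you have already fetched. The function name `header_problems` is hypothetical, and the check covers only server errors and this one header, not every issue URL Inspection can report.

```python
def header_problems(status, headers):
    """Return crawl-related problems visible in an HTTP response.

    status  -- the HTTP status code of the response
    headers -- a dict of response header names to values
    """
    normalized = {name.lower(): value.lower() for name, value in headers.items()}
    problems = []

    # 5xx responses indicate server-side errors.
    if status >= 500:
        problems.append(f"server error ({status})")

    # X-Robots-Tag may carry "noindex" or "none" (noindex plus nofollow),
    # optionally prefixed with a user agent such as "googlebot:".
    robots = normalized.get("x-robots-tag", "")
    directives = {token.strip() for token in robots.replace(":", ",").split(",")}
    if directives & {"noindex", "none"}:
        problems.append("X-Robots-Tag blocks indexing")
    return problems


print(header_problems(200, {"X-Robots-Tag": "noindex, nofollow"}))
# ['X-Robots-Tag blocks indexing']
```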

Summary

By using SiteGuru’s reports, you can take a systematic approach to indexation:

| Report | What to Check | What to Fix |
|---|---|---|
| Indexation Report | Pages that are not indexable or not indexed | Remove blockers or improve discoverability |
| Sitemap Report | Missing, broken, or redirected pages in sitemap | Add missing pages, fix broken links |
| Canonical Report | Incorrect or conflicting canonical tags | Ensure a clean, consistent canonical setup |
| Internal Linking | Orphan pages with no incoming links | Add internal links using suggestions |

Getting indexed isn’t magic — but with the right tools and a bit of patience, you can make sure Google sees and indexes all your important pages.